How Recommendation Systems Narrow Our Worldview

1. Do Algorithms Have “Preferences”?

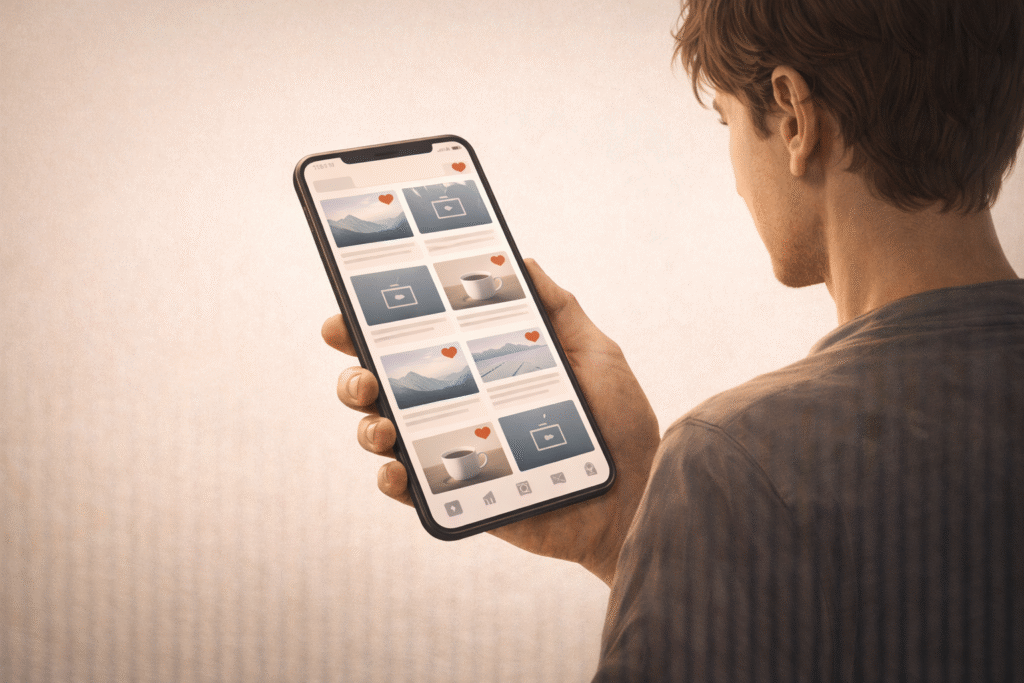

We interact with recommendation algorithms every day on platforms like YouTube, Netflix, and Instagram. These systems are designed to show us content we are likely to enjoy. At first glance, this seems helpful and efficient.

However, the problem lies in the assumption that these recommendations are neutral. They are not.

Algorithms analyze what we click on, how long we watch a video, which posts we like, and what we scroll past. Based on these patterns, they decide what to show us next. Over time, certain interests and viewpoints are repeatedly reinforced.

In effect, the algorithm behaves like a well-meaning but stubborn friend who keeps saying, “You liked this before, so this is all you need to see.”
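The loop described above, from interaction signals to the next recommendation, can be illustrated with a minimal sketch. It is a toy model, not how any real platform works: the `SIGNAL_WEIGHTS` values, the topic labels, and the function names `build_topic_profile` and `rank_candidates` are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical signal weights; real platforms do not publish theirs.
SIGNAL_WEIGHTS = {"click": 1.0, "like": 2.0, "watch_minute": 0.5, "skip": -1.0}


def build_topic_profile(events):
    """Sum weighted interaction signals per topic.

    events: iterable of (topic, signal, amount) tuples.
    """
    profile = defaultdict(float)
    for topic, signal, amount in events:
        profile[topic] += SIGNAL_WEIGHTS[signal] * amount
    return dict(profile)


def rank_candidates(profile, candidates):
    """Order candidates by the user's accumulated affinity for their topic."""
    return sorted(
        candidates,
        key=lambda item: profile.get(item["topic"], 0.0),
        reverse=True,
    )


# A made-up interaction history: mostly cooking, one skipped travel video.
events = [
    ("cooking", "click", 1),
    ("cooking", "watch_minute", 8),
    ("cooking", "like", 1),
    ("politics", "click", 1),
    ("travel", "skip", 1),
]
candidates = [
    {"title": "Budget travel tips", "topic": "travel"},
    {"title": "Election explainer", "topic": "politics"},
    {"title": "Five-minute pasta", "topic": "cooking"},
    {"title": "Intro to astronomy", "topic": "science"},
]

profile = build_topic_profile(events)
ranked = rank_candidates(profile, candidates)
for item in ranked:
    print(item["title"])
```

Even in this tiny model, the topic the user has already engaged with rises to the top, a topic they have never seen gets no boost, and a topic they skipped once sinks to the bottom.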

2. Filter Bubbles and Echo Chambers

As recommendations repeat, a phenomenon known as the filter bubble begins to form. A filter bubble refers to a situation in which we are exposed only to a narrow slice of available information.

For example, if someone frequently watches videos supporting a particular political candidate, the algorithm tends to prioritize similar content. Gradually, opposing viewpoints can all but disappear from that person’s feed.

When this filter bubble combines with an echo chamber, the effect becomes stronger. An echo chamber is an environment where similar opinions circulate and reinforce one another. Hearing the same ideas repeatedly makes them feel more certain and unquestionable, even when alternative perspectives exist.

3. How Worldviews Become Narrower

The bias built into recommendation systems affects more than just the content we consume. It also shapes how we think, in at least three ways.

First, it strengthens confirmation bias. We are more likely to accept information that aligns with our existing beliefs and dismiss what challenges them.

Second, it reduces diversity of exposure. Opportunities to encounter unfamiliar ideas, cultures, or values gradually diminish.

Third, it can intensify social division. People living in different filter bubbles often struggle to understand why others think differently. This dynamic contributes to political polarization, cultural conflict, and generational misunderstandings.

Consider a simple example. If someone frequently watches videos about vegetarian cooking, the algorithm may increasingly recommend content praising vegetarianism and criticizing meat consumption. Over time, the viewer may come to believe that eating meat is unquestionably wrong, making constructive dialogue with others more difficult.
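The loss of diversity described in the second point above can be made measurable. One common choice, used here as an assumption rather than as any platform's actual metric, is the Shannon entropy of the topics in a feed: the more evenly the feed is spread across topics, the higher the value. The function name `topic_entropy` and the sample feeds are hypothetical.

```python
import math
from collections import Counter


def topic_entropy(feed_topics):
    """Shannon entropy (in bits) of the topic distribution in a feed."""
    counts = Counter(feed_topics)
    total = len(feed_topics)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Two made-up feeds of eight items each.
varied_feed = ["cooking", "politics", "science", "travel"] * 2
narrow_feed = ["cooking"] * 7 + ["politics"]

varied_score = topic_entropy(varied_feed)  # four equally common topics: 2 bits
narrow_score = topic_entropy(narrow_feed)  # dominated by one topic: well below 2 bits
print(varied_score > narrow_score)
```

A feed made up of a single topic scores zero, so a falling score over time is one rough sign that a feed is narrowing.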

4. Why Does This Happen?

The primary goal of most platforms is not user enlightenment, but engagement. The longer users stay on a platform, the more advertising revenue it generates.

Content that provokes strong reactions, such as agreement, outrage, or emotional attachment, keeps users engaged for longer periods. Since people tend to engage more with content that confirms their beliefs, algorithms learn to prioritize such material.

As a result, the bias is not the product of deliberate intent; it emerges structurally from the system’s incentives.
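This structural effect can be sketched with a small deterministic simulation. It assumes a hypothetical platform that repeatedly shifts its feed mix toward whichever topics earned the most engagement, and a hypothetical user who engages slightly more often with content they agree with. The engagement rates, the `learning_rate` parameter, and the function name `simulate_feedback_loop` are illustrative assumptions, not measurements or a real system's design.

```python
def simulate_feedback_loop(engage_rate, rounds=20, learning_rate=0.5):
    """Return the feed share per topic after each round.

    engage_rate: hypothetical probability that the user engages with
    an item of each topic.
    """
    topics = list(engage_rate)
    share = {t: 1.0 / len(topics) for t in topics}  # start with a balanced feed
    history = [dict(share)]
    for _ in range(rounds):
        # Expected engagement per topic is its exposure times its engage rate.
        engagement = {t: share[t] * engage_rate[t] for t in topics}
        total = sum(engagement.values())
        target = {t: engagement[t] / total for t in topics}
        # Shift the feed mix part of the way toward what earned engagement.
        share = {
            t: (1 - learning_rate) * share[t] + learning_rate * target[t]
            for t in topics
        }
        history.append(dict(share))
    return history


# Assumed engagement rates: a modest preference for agreeable content.
rates = {"agrees": 0.6, "neutral": 0.4, "challenges": 0.3}
history = simulate_feedback_loop(rates)
for round_number in (0, 5, 10, 20):
    print(round_number, {t: round(s, 3) for t, s in history[round_number].items()})
```

No rule in the code says "hide challenging content". The feed starts balanced, yet optimizing for engagement alone steadily pushes it toward the topic the user already favors.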

5. How Can We Respond?

Although we cannot fully escape algorithmic systems, we can respond more thoughtfully.

- Consume diverse content intentionally: Seek out topics and perspectives you normally avoid.

- Adjust or reset recommendations: Some platforms allow users to limit or reset personalized suggestions.

- Practice critical reflection: Ask yourself, “Why was this recommended to me?” and “What viewpoints are missing?”

- Use multiple sources: Compare information across different platforms and media outlets.

These small habits can help restore balance to our information diets.

Conclusion

Recommendation algorithms are powerful tools that connect us efficiently to information and entertainment. Yet, if we remain unaware of their built-in biases, our view of the world can slowly shrink.

Technology itself is not the enemy. The challenge lies in how consciously we engage with it. In the age of algorithms, maintaining curiosity, openness, and critical thinking is essential.

Ultimately, even in a data-driven world, the responsibility for perspective and judgment still belongs to us.

References

1. Pariser, E. (2011). The Filter Bubble: What the Internet Is Hiding from You. New York: Penguin Press.

→ This book popularized the concept of the filter bubble, explaining how personalized algorithms can limit exposure to diverse information and deepen social divisions.

2. O’Neil, C. (2016). Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. New York: Crown.

→ O’Neil examines how large-scale algorithms, including recommendation systems, can reinforce bias and inequality under the appearance of objectivity.

3. Noble, S. U. (2018). Algorithms of Oppression: How Search Engines Reinforce Racism. New York: NYU Press.

→ This work provides a critical analysis of how algorithmic systems can reproduce social prejudices, particularly regarding race and gender.