Is Human Freedom an Illusion or a Reality?

The Weight of the Question

We live with the persistent feeling that we choose.

We choose what to eat in the morning, which career to pursue, how to respond in moments of crisis. These decisions feel like ours — deliberate, intentional, free.

But what if that feeling is deceptive?

What if every thought, every intention, every choice is simply the unfolding of prior causes — neural activity, genetic predispositions, environmental influences?

Today, we step onto a stage of inquiry where two long-standing rivals confront one another: determinism and the defense of free will.

1. The Case for Determinism: Freedom as Illusion

Determinism holds that every event is caused by preceding conditions in accordance with natural laws. From this perspective, human thought and action are no exception.

Spinoza famously argued that our belief in free will rests on ignorance of causes. We feel free because we are conscious of our desires but do not perceive the chain of necessity behind them.

Modern neuroscience adds further tension to the debate. In Benjamin Libet’s experiments, a buildup of brain activity preceding a voluntary movement, known as the readiness potential, appeared a few hundred milliseconds before participants reported consciously deciding to act. If the brain initiates movement before conscious intention arises, then what becomes of free choice?

On this view, free will may be little more than post-hoc rationalization: a story we tell ourselves after the brain has already acted.

2. The Defense of Freedom: Responsibility and Moral Agency

Yet the opposing side insists: freedom must be real.

If every action were predetermined, how could moral responsibility exist? Praise, blame, justice — all would lose their grounding.

Immanuel Kant argued that freedom is a necessary condition for moral law. Jean-Paul Sartre went further, claiming that human beings are “condemned to be free,” burdened with the responsibility of choice.

Defenders of free will also caution against over-interpreting neuroscience. Libet’s experiments concern simple motor movements, not complex moral deliberation. The act of resisting temptation, reflecting on consequences, or sacrificing personal gain for ethical principles may not be reducible to automatic neural impulses.

3. A Third Path: Compatibilism

Between these poles lies compatibilism — the attempt to reconcile causality and freedom.

Philosophers such as Daniel Dennett argue that freedom does not require independence from causation. Rather, freedom consists in acting according to one’s own motives and reasoning processes, even if those processes have causal histories.

In this sense, we may inhabit a determined universe yet still possess a form of agency “worth wanting.”

4. Why This Debate Matters Today

This is not merely an abstract philosophical puzzle.

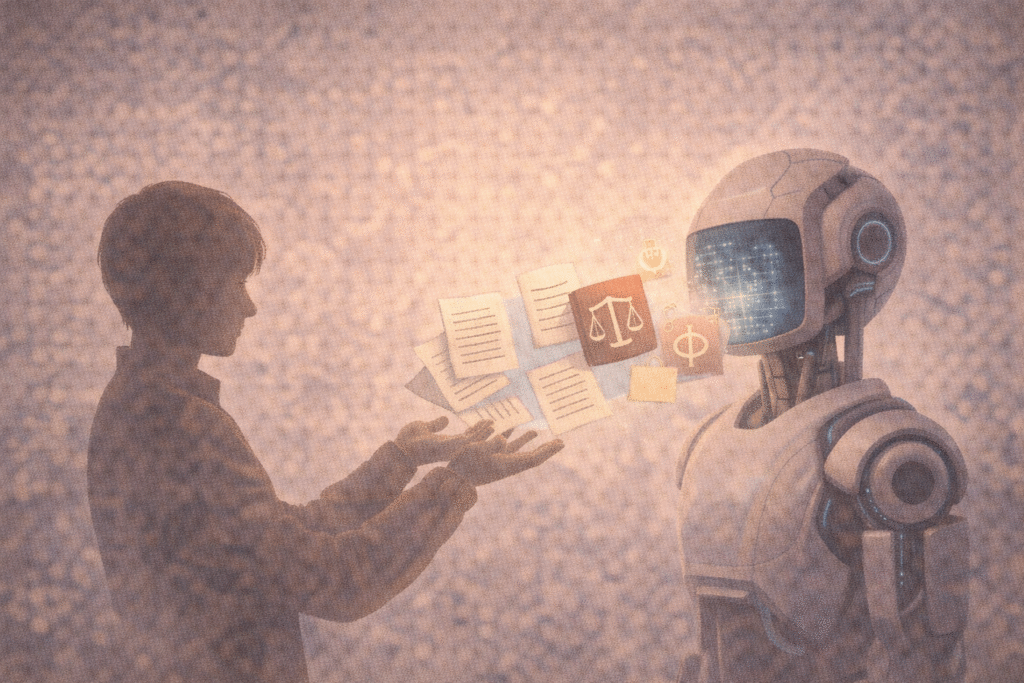

Law and Justice

If free will is illusory, should punishment give way entirely to rehabilitation?

Moral Judgment

Can we meaningfully blame or praise individuals if they could not have acted otherwise?

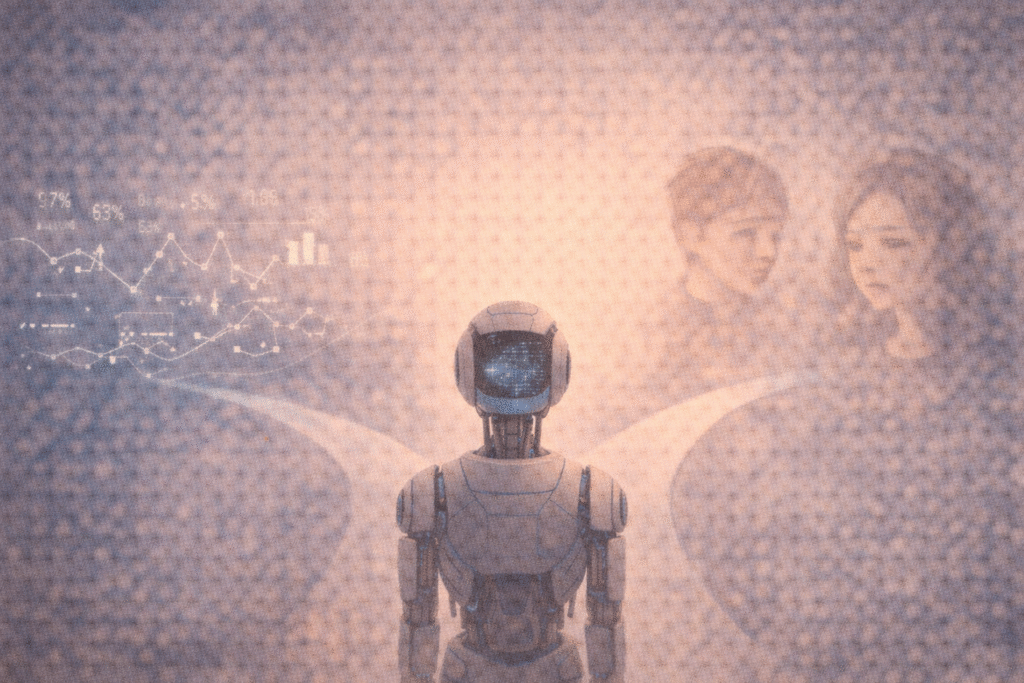

Artificial Intelligence

As AI systems become increasingly autonomous, the debate takes on new urgency. If humans themselves operate under deterministic constraints, what distinguishes human agency from machine decision-making?

Conclusion: An Open Verdict

The stage remains undecided.

Determinism offers scientific weight.

Free will defends moral dignity.

Compatibilism seeks reconciliation.

Perhaps the deeper question is not whether we are metaphysically free, but how we ought to live in light of this uncertainty.

If we are not free, who is responsible?

If we are free, how do we bear the weight of that freedom?

The trial continues — not in a courtroom, but within each of us.

References

1. Spinoza, Baruch. (1677/1994). Ethics. Translated by Edwin Curley. Princeton: Princeton University Press.

Spinoza argues that human beings are entirely subject to the causal order of nature. What we call “free will,” he contends, is merely ignorance of the causes that determine our actions. His determinist framework continues to serve as a foundational critique of autonomous agency.

2. Kant, Immanuel. (1788/1997). Critique of Practical Reason. Translated by Mary Gregor. Cambridge: Cambridge University Press.

Kant maintains that moral responsibility presupposes freedom. For him, free will is not an empirical observation but a necessary postulate of practical reason. Without freedom, the coherence of moral law and ethical accountability would dissolve.

3. Sartre, Jean-Paul. (1943/1992). Being and Nothingness. Translated by Hazel E. Barnes. New York: Washington Square Press.

Sartre famously describes human beings as “condemned to be free.” In his existentialist account, freedom is inseparable from responsibility, and individuals continuously define themselves through their choices. His perspective intensifies the debate by grounding freedom in lived experience rather than abstract metaphysics.

4. Libet, Benjamin. (2004). Mind Time: The Temporal Factor in Consciousness. Cambridge, MA: Harvard University Press.

Libet’s neuroscientific experiments suggest that neural activity associated with decision-making can precede conscious awareness. This finding has been widely interpreted as evidence challenging traditional conceptions of free will, reinforcing determinist interpretations from a scientific perspective.

5. Dennett, Daniel C. (1984/2003). Elbow Room: The Varieties of Free Will Worth Wanting. Cambridge, MA: MIT Press.

Dennett defends compatibilism, arguing that meaningful forms of freedom can exist within a causally structured universe. Rather than seeking absolute metaphysical independence, he reframes free will as the kind of agency that sustains responsibility, rational deliberation, and social cooperation.